Distortions in Science Communication

How can ML and NLP help maintain trust in science?

The first time I remember seeing people wearing surgical masks in public was when I went to Japan a few years ago. Any time I was out in public or on the train, sure enough there would be a handful of people donning masks, both disposable and reusable cloth ones. At the time I was pretty impressed by the commitment to hygiene and goodwill this demonstrated, but I also wrote it off as a bit superfluous.

Without the pandemic, I would likely have gone the rest of my life without thinking much about surgical face masks again. With no background in public health, I pretty much had to defer to authorities who knew better when suddenly everyone was talking about masking, ventilation, and R numbers. I knew next to nothing at the time and wasn’t planning to go read a bunch of scientific literature on it; I just relied on what I heard about and read in the news and on Twitter (and what the Danish government recommended). Basically, I was counting on people I hoped knew better to give me useful information.

Survey data shows that, when it comes to science in general, I’m not alone in this. A 2017 Pew Research Center survey found that the majority of Americans get their science news from general news outlets, yet few see those outlets as accurate.

Since then, engagement with science news has increased, while trust in scientists themselves has decreased.

People reading more about science is encouraging, but why are fewer and fewer people finding scientists trustworthy?

The way science is presented in the news is known to affect people’s trust in science itself, as well as their health and behavior [1,2,3,4]. The news is the primary vehicle through which people learn about science, so I would argue that if we care about science as an institution, and about keeping people safe and informed, we should care about how science is communicated. How do you effectively deliver the best and most accurate scientific information to people? How do you inform people without overwhelming them? How do you get the average person interested in science? How do you maintain trust in science?

Let’s take a look at the science communication process. First, some nomenclature: I’m going to differentiate between scholarly writing, science reporting, and science communication. Scholarly writing includes primary-source original science papers, which are written by, and best understood by, experts; science reporting includes any secondary source about a piece of scholarly writing, including press releases, news articles, and general online discourse (e.g. tweets), whose authors can be anyone (scientists, reporters, lay people, etc.); science communication is a broad term capturing both.

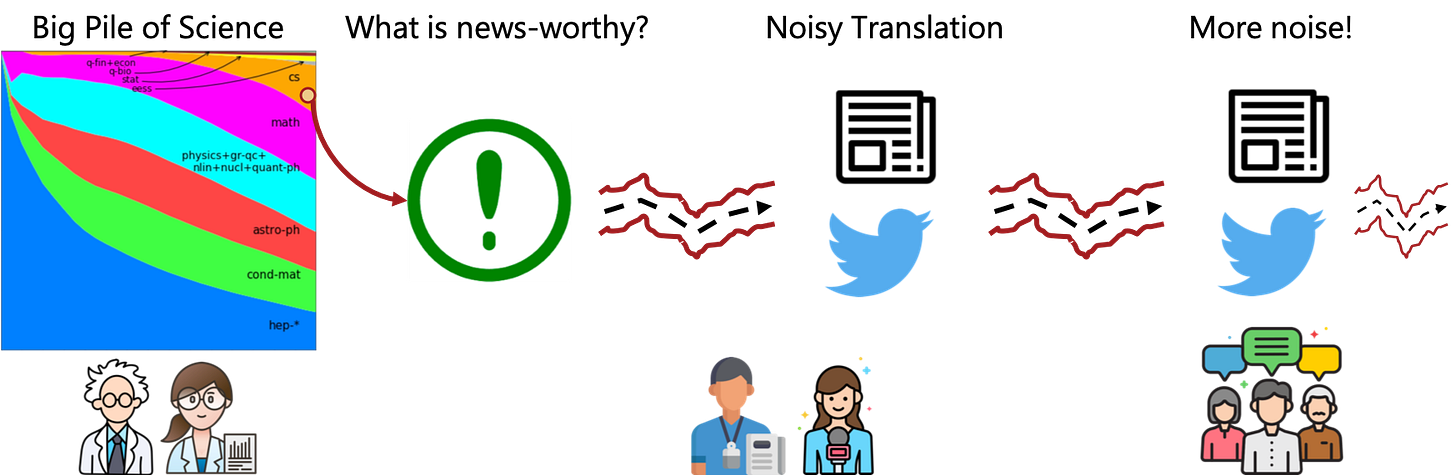

The science communication pipeline can roughly be broken down into the stages below:

(Science pile graphic from https://info.arxiv.org/help/stats/2021_by_area/index.html)

Basically, we start with this big pile of scholarly writing, which grows every day. Clearly, no one is going to get around to reporting on every single new piece of science, so the first step is article selection, deciding what is newsworthy. There are a lot of factors here: what is timely, what is novel, what is personally interesting to a reporter, how many people are affected, and the list goes on [5]. The spike in reporting on masking during the pandemic makes sense in this framework, since the topic was timely because of the pandemic and had a far reach because it affected basically everyone.

Now that a piece of science has been identified as worth reporting on, a science reporter needs to translate it into terms lay people understand. The piece also needs to be engaging enough that people actually want to read it. This is where things can start to go wrong: there are lots of competing incentives and a need to simplify the language. We’re starting to introduce noise.

Then there’s another translation step, from both primary and secondary sources to public discussion and dissemination, e.g. through social media. Now we’re in an environment where one potentially has only 280 characters to discuss extremely complex scientific topics, and where extreme and emotional language wins out over nuanced and informative discussion. And the further the reporting gets from the original source, the more noise is introduced, like a really bad game of telephone.

The following example illustrates this. I’m going to pick on some well-meaning people; this mostly demonstrates the process of distortion, which can occur even (and potentially most perniciously) in people with good intentions. Keeping with the masking theme, we’ll use some tweets about this paper on the effectiveness of different forms of masking at preventing aerosol spread. Essentially, the authors perform a controlled simulation of aerosol release on several different types of face coverings (N95 respirator, medical-grade procedure mask, 3-ply cotton cloth face mask, bandana, and face shield) and report on their ability to block small aerosols. I found the tweets I’ll use by searching Twitter for “face masks” on March 6, 2023 and taking a cursory look over the results.

First, we have a tweet about this paper from a highly popular (>150k followers) scientist:

While the tweet is technically not inaccurate, and it’s great that the author includes the actual aerosol blocking rates, it is arguably misleading to say that ANY mask is better than none, since face shields are only 2% effective in the paper’s simulations. It is also important to know that the simulations in the paper “used a single cough volume, air flow profile, and aerosol size distribution”, a limited set of variables from which to generalize. This has important implications. Yes, the face coverings studied in the paper reduce, to varying degrees, the dispersion of small aerosol particles, but the results say nothing about protection against respiratory illness in real-world situations, where not everyone is masked and those who are likely aren’t wearing their masks properly. More information about these variables is needed to better inform other behavior such as social distancing and hand hygiene. (Again, I’m being nit-picky to illustrate a point; the author actually includes that tweet in a thread which contextualizes it a bit more.)

Take another step down the communication pipeline and the distortion grows. Here is a tweet quote-tweeting the one above:

The paper makes no claims about the benefits of one-way masking. Also, “significant” has a very precise definition in the context of science (one that we in ML and NLP are extremely guilty of abusing as well). The tweet’s claim may well be true, but it isn’t fair to extrapolate it from the results of that paper.

I don’t think science reporters are (in general) acting in bad faith. However, there are a lot of competing incentives when it comes to reporting on science topics. You want your writing to be compelling so that people are interested in reading it. If you’re trying to persuade people of a belief or worldview which (presumably, in your eyes) will benefit their lives, that will inform which rhetorical strategies and which evidence you use to get your point across. Cold, calculated, precise, sober, nuanced science reporting isn’t likely to win over readers. In some ways one could argue that such reporting isn’t even desirable if there is important information which needs to be disseminated to the public and we need that public to actually read, be interested in, and understand it (such as information on masking during the pandemic). But distorted scientific reporting also has a potential negative health impact, such as when people decide that the risks from a vaccine are not worth it because its effectiveness is presented as uncertain (see [6] for some potential evidence of this). So how do we deal with it?

My PhD has focused on addressing the problem of distorted scientific communication using machine learning. We have focused on very specific changes (exaggeration [7]) as well as very broad non-specific changes [8]. There’s a big piece missing though: what exactly are the ways that science reporters tend to get the message wrong? This turns out to be a non-trivial question.

Many distortions can be categorized under the general umbrella of “sensationalism”. Two studies I like to go back to, and which we used in our paper on exaggeration detection, are Sumner et al. 2014 and the subsequent replication study by Bratton et al. 2019, both in the health science domain [9,10]. In those studies, experts hand-coded press releases (articles written by university and journal press offices for distribution to journalists, which end up making up a large portion of the content of downstream news articles) and their associated papers along several dimensions, including:

Causal claim strength (e.g. “X is associated with Y” vs. “X causes Y”)

Advice given

Inference from animals to humans (i.e. generalization of results)

The high-level results were that health science press releases exaggerated causal claims 33% of the time, exaggerated advice 40% of the time, and generalized from animals to humans 36% of the time. This has a huge impact on how the news reports on science: when press releases exaggerate claim strength, the odds that news articles also exaggerate are 20x higher (an odds ratio of 20). For advice, the odds ratio is 6.5, and for generalization from animals to humans it is 56.
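The multipliers reported by Sumner et al. [9] are odds ratios, and it is worth making explicit what a number like “20x” measures. Below is a minimal Python sketch that computes an odds ratio from a 2×2 table of press releases versus news articles. The counts, the `ContingencyTable` class, and the function names are hypothetical, chosen purely for illustration; they are not data from [9] or [10].

```python
from dataclasses import dataclass


@dataclass
class ContingencyTable:
    """Hypothetical counts of news articles, split by press release (PR) and news exaggeration."""
    pr_exag_news_exag: int  # PR exaggerated, news exaggerated
    pr_exag_news_ok: int    # PR exaggerated, news did not
    pr_ok_news_exag: int    # PR not exaggerated, news exaggerated anyway
    pr_ok_news_ok: int      # neither exaggerated


def odds_ratio(t: ContingencyTable) -> float:
    """Odds of news exaggeration after an exaggerated PR, divided by the odds after a non-exaggerated PR."""
    odds_after_exag_pr = t.pr_exag_news_exag / t.pr_exag_news_ok
    odds_after_ok_pr = t.pr_ok_news_exag / t.pr_ok_news_ok
    return odds_after_exag_pr / odds_after_ok_pr


def risk_ratio(t: ContingencyTable) -> float:
    """Ratio of the *proportions* of exaggerated news in the two groups, for comparison."""
    p_after_exag_pr = t.pr_exag_news_exag / (t.pr_exag_news_exag + t.pr_exag_news_ok)
    p_after_ok_pr = t.pr_ok_news_exag / (t.pr_ok_news_exag + t.pr_ok_news_ok)
    return p_after_exag_pr / p_after_ok_pr


# Hypothetical counts: 40 of 50 news articles exaggerate after an exaggerated PR,
# versus 10 of 60 after a non-exaggerated one.
table = ContingencyTable(pr_exag_news_exag=40, pr_exag_news_ok=10,
                         pr_ok_news_exag=10, pr_ok_news_ok=50)
print(f"odds ratio: {odds_ratio(table):.1f}")  # odds ratio: 20.0
print(f"risk ratio: {risk_ratio(table):.1f}")  # risk ratio: 4.8
```

The same hypothetical table gives a ratio of proportions of only 4.8 (80% versus roughly 17%), which is why a large odds ratio shouldn’t be read as “X times as likely” when the outcome is common.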

Another related distortion is in the certainty of findings, which my collaborators Jiaxin Pei and David Jurgens at the University of Michigan have worked on [11] (for an excellent overview of certainty in science and NLP, take a look at their paper). Broadly, certainty is a multifaceted aspect of communication that can be modeled in multiple ways, but it is generally viewed, coarsely, as “hedging”. It is an important aspect of science communication, as the level of certainty with which science is presented has been observed to affect people’s trust in science [2].
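To illustrate the coarse “hedging” view of certainty, here is a toy Python sketch that scores a sentence by counting cue words. The two word lists and the +1/-1 scoring rule are assumptions made up for illustration; this is not the model from [11], which is learned from annotated data and captures far more than a word list can.

```python
import re

# Hypothetical, hand-picked hedge cues; a real lexicon or trained model would be far richer.
HEDGE_CUES = {"may", "might", "could", "suggest", "suggests", "possibly",
              "likely", "appears", "associated", "preliminary"}
# Hypothetical booster cues that signal high certainty.
BOOSTER_CUES = {"proves", "proven", "causes", "definitely", "clearly", "always", "shows"}


def certainty_score(sentence: str) -> int:
    """Crude certainty score: +1 per booster cue, -1 per hedge cue."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    boosters = sum(t in BOOSTER_CUES for t in tokens)
    hedges = sum(t in HEDGE_CUES for t in tokens)
    return boosters - hedges


paper = "Our results suggest that gene X might cause disease Y."
news = "Gene X causes disease Y."
print(certainty_score(paper), certainty_score(news))  # -2 1
```

A jump in score from a paper’s sentence to its reporting is one crude signal of a certainty change. The example also shows the limits of word lists: “cause” and “causes” are treated differently here simply because only one of them is in the booster list.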

Cherry-picking is also prevalent, and is related to news values (why do some findings get covered and others don’t?) [12]. If news values (i.e. newsworthiness) dictate which scientific discoveries are covered, it makes sense that journalists would emphasize these aspects in their reporting (e.g. novelty, timeliness, how many people the discovery affects, etc.). But cherry-picking can lead people to see only the highly positive or highly negative aspects of science, washing out the nuance inherent in most scientific findings (at least in ML and NLP, I have yet to see a study or tool where literally everything worked and was executed perfectly, and ChatGPT is a great example of this). This matters if people start to see science as just a series of false promises.

Some scientists have also identified a lack of balanced viewpoints as a distortion in science reporting, for example presenting the findings of a certain field in an overly optimistic light [13]. Closely related to cherry-picking, the problem here is failing to inform readers of caveats to findings or of the potential risks of medical treatments: the classic “miracle cure for disease X discovered!” headline.

The now-defunct healthnewsreview.org watchdog group has a great list of distortions to look out for when reading health news articles (which has fortunately been preserved here). The list includes several important issues specific to medical science, such as whether the article reports the funding source and conflicts of interest, whether it adequately discusses the costs, benefits, risks, and novelty of the science, and whether it includes sensational language. Many of the criteria listed there generalize to other fields (e.g. novelty, conflicts of interest, sensational language).

What is missing from the literature is a comprehensive list of distortions in science writing that generalizes across fields (if you are aware of something like this, please let me know!). Such a list would be useful for building automated tools to flag distortions in science reporting: a taxonomy gives us an explicit set of classes which we can use to triage science reporting and help determine what to do about a given article. This could help with building automated skeptics, by guiding the messaging given to a user reading a piece of science reporting (e.g. “Hey, it looks like this headline is exaggerated and this advice is inferred, but isn’t mentioned in the actual paper”).

I can take a crack at a list based on what I’m aware of and what I think would generalize across fields, but I could definitely be missing something:

Sensational language (e.g. “miracle cure!”, “ChatGPT has solved AGI”) [14,15,16,17]

Exaggerated claims (e.g. “gene X is associated with disease Y” → “gene X causes disease Y”) [9,10]

Changes in certainty (e.g. “gene X might cause disease Y” → “gene X causes disease Y”) [2]

Exaggerated advice (e.g. “based on their results, anyone who wants to lose weight should eat a bar of dark chocolate each night” ← maybe wishful thinking on my part) [9,10]

Overstating future predictions (e.g. “A new drug could treat type-2 diabetes” about a study which has only been done in mice, “...we may actually see AI emotionality by the end of the decade” in an article about ChatGPT) (see healthnewsreview.org criteria)

Generalization of results (see the same type-2 diabetes example above) [9,10]

Cherry-picked results (e.g. reporting on positive results and ignoring negative results) [12]
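To make the idea of a taxonomy as an explicit set of classes more concrete, here is a minimal Python sketch that encodes the list above as an enum and attaches toy rules for two of the classes: exaggerated claims (correlational wording in the source turned causal in the report) and sensational language. The class names, regular expressions, and message wording are all hypothetical; a usable system would need learned models rather than keyword rules.

```python
import re
from enum import Enum


class Distortion(Enum):
    """One class per item in the (non-exhaustive) list of distortions above."""
    SENSATIONAL_LANGUAGE = "sensational language"
    EXAGGERATED_CLAIM = "exaggerated claim"
    CERTAINTY_CHANGE = "change in certainty"
    EXAGGERATED_ADVICE = "exaggerated advice"
    OVERSTATED_PREDICTION = "overstated future prediction"
    GENERALIZATION = "generalization of results"
    CHERRY_PICKING = "cherry-picked results"


# Toy patterns (hypothetical): correlational vs. causal phrasing, and hype words.
CORRELATIONAL = re.compile(r"\b(associated with|linked to|correlated with)\b", re.I)
CAUSAL = re.compile(r"\b(causes?|leads? to|results? in)\b", re.I)
SENSATIONAL = re.compile(r"\b(miracle|breakthrough|revolutionary|cure)\b", re.I)


def flag(source: str, report: str) -> list[Distortion]:
    """Return the distortion classes this toy rule set detects in `report` relative to `source`."""
    flags = []
    # Correlational source, causal report: flag as an exaggerated claim.
    if CORRELATIONAL.search(source) and not CAUSAL.search(source) and CAUSAL.search(report):
        flags.append(Distortion.EXAGGERATED_CLAIM)
    # Hype words that appear in the report but not in the source.
    if SENSATIONAL.search(report) and not SENSATIONAL.search(source):
        flags.append(Distortion.SENSATIONAL_LANGUAGE)
    return flags


def skeptic_message(flags: list[Distortion]) -> str:
    """Turn detected classes into a user-facing note, in the spirit of an automated skeptic."""
    if not flags:
        return "No distortions detected by these rules."
    return "Heads up: this may contain " + ", ".join(f.value for f in flags) + "."


source = "Gene X is associated with disease Y."
report = "Miracle finding: gene X causes disease Y!"
print(skeptic_message(flag(source, report)))
# Heads up: this may contain exaggerated claim, sensational language.
```

Keeping the classes in one enum means every detector, however it is implemented, reports into the same vocabulary, which is what makes triage and user-facing messaging possible.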

The list above is probably not comprehensive, and trying to enumerate every possible distortion in science communication may be going down a rabbit hole; the ones I’ve identified here appear to be pretty salient and exist across disciplines. But it shouldn’t be impossible to develop an authoritative list: newsworthiness is also pretty subjective, yet lots of existing work clearly defines and enumerates the different overarching factors which contribute to the news value of scientific findings [5,12,18,19].

This also goes back to my automated skeptics piece. People don’t have the time to become experts on every topic, and they certainly don’t have the time to go and read an entire body of scientific literature to validate scientific claims they see online. So it could be useful to have a tool which does this for us, communicating caution and highlighting differences between how science is presented online and the actual science. That way, people can have some protection from distorted science reporting, while science reporters can potentially have tools which help minimize those distortions in their writing.
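A first building block for such a tool is figuring out which part of a paper a piece of reporting is talking about. Here is a minimal Python sketch that pairs a claim with its most similar paper sentence using bag-of-words cosine similarity. This is a deliberately simple stand-in, my assumption for illustration, for the learned semantic similarity models a realistic system would need; the example sentences and function names are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lower-cased word counts; a stand-in for a learned sentence embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def best_source_sentence(claim: str, paper_sentences: list[str]) -> tuple[str, float]:
    """Return the paper sentence most similar to `claim`, with its similarity score."""
    claim_vec = bag_of_words(claim)
    scored = [(s, cosine(claim_vec, bag_of_words(s))) for s in paper_sentences]
    return max(scored, key=lambda pair: pair[1])


# Hypothetical paper sentences and a hypothetical tweet-style claim.
paper = [
    "We simulated a single cough volume and aerosol size distribution.",
    "The N95 respirator blocked most small aerosols in our simulations.",
    "The face shield blocked only a small fraction of aerosols.",
]
claim = "Face shields barely block aerosols at all!"
match, score = best_source_sentence(claim, paper)
print(match)  # The face shield blocked only a small fraction of aerosols.
```

Once a claim is paired with its likely source sentence, the two can be compared for the kinds of distortions listed above, such as changes in certainty or exaggerated claims. Note that exact word matching misses “shield” vs. “shields” and “block” vs. “blocked”, one reason learned representations are the realistic choice.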

The nice thing about this framework is that it provides individual protection and can potentially be distributed at scale. It also doesn’t depend on platforms or science news outlets effectively implementing their own fact-checking pipelines, though that would be great too.

I’ll end with some perspective: this is far from a new problem. A 1970 study by Tichenor et al. [17] examined how lay people and scientists understood and viewed science through the media. Some of their key results were:

Lay people were asked to read science news articles (75 articles, 15-20 lay people) and to summarize what they thought each article said; then, the scientists quoted in those articles rated the accuracy of the individual statements in the lay people’s summaries on a 7-point Likert scale. The average percentage of an article’s statements with an accuracy score above 4 was 62.5%, with a low of 30% and a high of 95%, indicating a large range in how well lay people understood the underlying science from a given news article.

Scientists were also asked to judge the frequency of certain criticisms in science news; the most noted criticisms were an overemphasis on novelty, omission of relevant information, and misleading headlines.

They also note that scientists were more concerned with “subjective” details such as interpretations of results and implications for social policies.

Scientists were also biased towards articles which quoted them: overall, they rated 54.9% of articles as accurate, but 94.5% of the articles in which they were quoted.
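The accuracy figure in the first of these results is a share of statements computed per article and then summarized across articles. Here is a small Python sketch of one reading of that computation; the ratings, article names, and helper function are invented for illustration and are not Tichenor et al.’s data.

```python
from statistics import mean

LIKERT_THRESHOLD = 4  # a statement counts as accurate if rated strictly above 4 on the 1-7 scale


def share_accurate(ratings: list[int]) -> float:
    """Fraction of one article's summary statements rated above the threshold."""
    return sum(r > LIKERT_THRESHOLD for r in ratings) / len(ratings)


# Hypothetical scientist ratings (1-7) of lay readers' statements, one list per article.
ratings_by_article = {
    "article_a": [7, 6, 5, 2],     # 3 of 4 statements above 4
    "article_b": [3, 4, 6, 2],     # 1 of 4
    "article_c": [5, 6, 7, 7, 6],  # 5 of 5
}
shares = {name: share_accurate(r) for name, r in ratings_by_article.items()}
values = list(shares.values())
print(f"mean {mean(values):.1%}, low {min(values):.0%}, high {max(values):.0%}")
# mean 66.7%, low 25%, high 100%
```

Averaging per-article shares gives every article equal weight regardless of how many statements its summaries contain; pooling all statements across articles instead would weight longer summaries more heavily and could give a different number.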

~~

Science communication in general is difficult, and science reporting is potentially even more difficult, but it’s extremely important for people’s health, behavior, and ultimately their trust in science. We have an opportunity in ML and NLP to help understand and improve science communication in order to foster a better-informed public. It’s a fun topic to work on, and there is a lot of open territory in terms of building datasets, defining tasks, and developing new methods, so I’d encourage anyone interested to check out some of our existing work (shameless plug [7,8,11]) and think about making some contributions in this area.

References

[1] Kuru, Ozan, Dominik Stecula, Hang Lu, Yotam Ophir, Man-pui Sally Chan, Ken Winneg, Kathleen Hall Jamieson, and Dolores Albarracín. "The effects of scientific messages and narratives about vaccination." PLoS One 16, no. 3 (2021): e0248328.

[2] Fischhoff, Baruch. "Communicating uncertainty: Fulfilling the duty to inform." Issues in Science and Technology 28, no. 4 (2012): 63-70.

[3] Hart, P. Sol, and Lauren Feldman. "The impact of climate change–related imagery and text on public opinion and behavior change." Science Communication 38, no. 4 (2016): 415-441.

[4] Gustafson, Abel, and Ronald E. Rice. "The effects of uncertainty frames in three science communication topics." Science Communication 41, no. 6 (2019): 679-706.

[5] Badenschier, Franziska, and Holger Wormer. "Issue selection in science journalism: Towards a special theory of news values for science news?" In The sciences’ media connection–public communication and its repercussions, pp. 59-85. Dordrecht: Springer Netherlands, 2011.

[6] Featherstone, Jieyu Ding, and Jingwen Zhang. "Feeling angry: the effects of vaccine misinformation and refutational messages on negative emotions and vaccination attitude." Journal of Health Communication 25, no. 9 (2020): 692-702.

[7] Wright, Dustin, and Isabelle Augenstein. "Semi-Supervised Exaggeration Detection of Health Science Press Releases." In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pp. 10824-10836. 2021.

[8] Wright, Dustin, Jiaxin Pei, David Jurgens, and Isabelle Augenstein. "Modeling Information Change in Science Communication with Semantically Matched Paraphrases." In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, pp. 1783-1807. 2022.

[9] Sumner, Petroc, Solveiga Vivian-Griffiths, Jacky Boivin, Andy Williams, Christos A. Venetis, Aimée Davies, Jack Ogden et al. "The association between exaggeration in health related science news and academic press releases: retrospective observational study." BMJ 349 (2014).

[10] Bratton, Luke, Rachel C. Adams, Aimée Challenger, Jacky Boivin, Lewis Bott, Christopher D. Chambers, and Petroc Sumner. "The association between exaggeration in health-related science news and academic press releases: a replication study." Wellcome Open Research 4 (2019).

[11] Pei, Jiaxin, and David Jurgens. "Measuring Sentence-Level and Aspect-Level (Un)certainty in Science Communications." In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing. 2021.

[12] Molek-Kozakowska, Katarzyna. "Communicating environmental science beyond academia: Stylistic patterns of newsworthiness in popular science journalism." Discourse & Communication 11, no. 1 (2017): 69-88.

[13] Condit, Celeste. "Science reporting to the public: Does the message get twisted?" CMAJ 170, no. 9 (2004): 1415-1416.

[14] Saguy, Abigail C., and Rene Almeling. "Fat in the fire? Science, the news media, and the “obesity epidemic”." In Sociological Forum, vol. 23, no. 1, pp. 53-83. Oxford, UK: Blackwell Publishing Ltd, 2008.

[15] Ransohoff, David F., and Richard M. Ransohoff. "Sensationalism in the media: when scientists and journalists may be complicit collaborators." Effective Clinical Practice 4, no. 4 (2001).

[16] Dempster, Georgia, Georgina Sutherland, and Louise Keogh. "Scientific research in news media: a case study of misrepresentation, sensationalism and harmful recommendations." Journal of Science Communication 21, no. 1 (2022): A06.

[17] Tichenor, Phillip J., Clarice N. Olien, Annette Harrison, and George Donohue. "Mass communication systems and communication accuracy in science news reporting." Journalism Quarterly 47, no. 4 (1970): 673-683.

[18] Johnson, Kenneth G. "Dimensions of judgment of science news stories." Journalism Quarterly 40, no. 3 (1963): 315-322.

[19] Secko, David M., Elyse Amend, and Terrine Friday. "Four models of science journalism: A synthesis and practical assessment." Journalism Practice 7, no. 1 (2013): 62-80.